Srikantan Sankaran 7/10/2017 6:19:57 AM

With Azure Site Recovery (ASR), it is now possible to orchestrate the failover of workloads, such as Web Applications running on Azure Virtual Machines, from a Primary Site to a DR Site. Using Recovery Plans and Runbook Automation, one can script specific configuration actions on the Compute resources recovered by ASR and bring the Application live on the DR Site. The Recovery Services Vault in ASR also provides the ability to simulate and test a failover scenario.

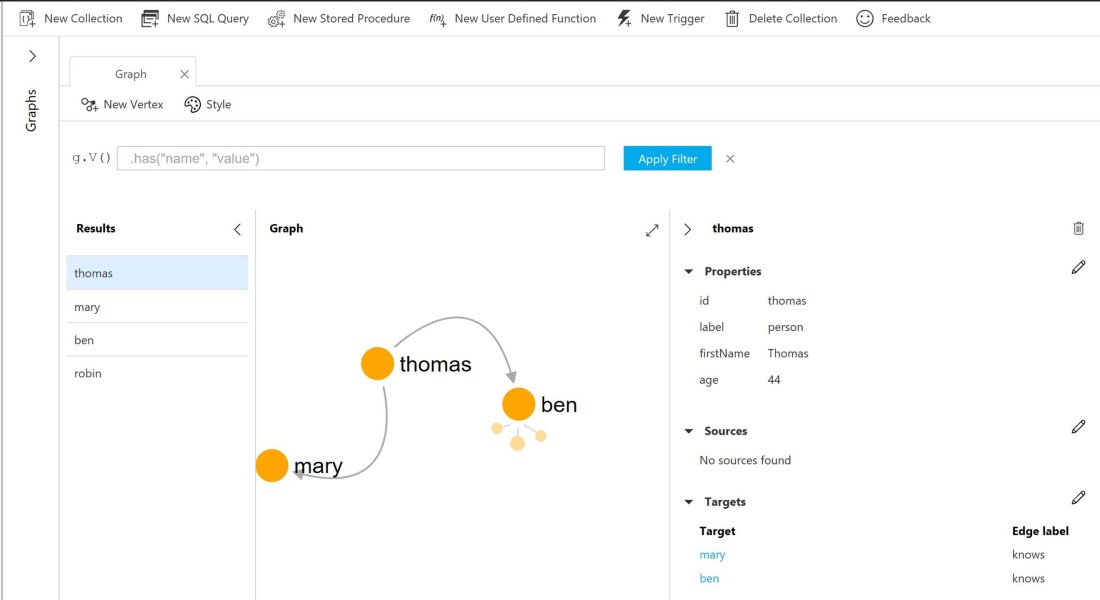

Covered here is a scenario where a 2-tier web application, comprising an ASP.NET Forms Application running in one Azure Virtual Machine and connecting to a SQL Server 2014 database in another Virtual Machine, is replicated using ASR to another Azure Datacenter. The steps to replicate the Application to the DR Site and test the failover are broadly as follows:

1. From the Azure Portal, create a Recovery Services Vault in another Azure Data Center, and add the 2 Virtual Machines running in the Primary Data Center as the items to be protected in it.

2. Enable replication. The Web Server and Database Server VMs are then replicated to the target Data Center.

The steps to perform these actions are documented here

As an outcome of the above step, the Virtual Machine images are replicated to the DR Site, and the VNet and Subnet settings are mirrored from their counterparts in the Primary Data Center. However, the Public IP Address resources configured for the Web Server and the NSG Rules in the Primary Data Center are not replicated. These resources need to be added and configured in the DR Site for the Application to be available and accessible. Also, the connection string in the Web.config of the ASP.NET Application needs to be updated with the Internal IP Address of the VM running SQL Server in the DR Site. These configuration steps can be implemented using Recovery Plans, which invoke PowerShell scripts through Runbook Automation.
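To make the Public IP part concrete, here is a minimal sketch of how a Public IP could be created and attached to the first network interface of a failed-over VM with the AzureRM cmdlets. This is not the asraddpublicip Runbook itself. The function names `Split-NicResourceId` and `Add-PublicIpToVm` and the `-pip` naming suffix are hypothetical, and the sketch assumes an already authenticated AzureRM session.

```powershell
# Hypothetical helper: split a NIC resource ID into its resource group and name.
# Expected shape:
# /subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Network/networkInterfaces/<name>
function Split-NicResourceId {
    param([Parameter(Mandatory)][string]$ResourceId)
    $parts = $ResourceId.Trim('/') -split '/'
    [pscustomobject]@{
        ResourceGroupName = $parts[3]
        Name              = $parts[-1]
    }
}

# Hypothetical helper: create a dynamic Public IP and link it to the VM's first NIC.
# Assumes the session is already logged in to Azure (for example via a Run As connection).
function Add-PublicIpToVm {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$VMName
    )
    $vm     = Get-AzureRmVM -ResourceGroupName $ResourceGroupName -Name $VMName
    $nicRef = Split-NicResourceId -ResourceId $vm.NetworkProfile.NetworkInterfaces[0].Id
    $nic    = Get-AzureRmNetworkInterface -ResourceGroupName $nicRef.ResourceGroupName -Name $nicRef.Name

    # The "-pip" suffix is an illustrative naming choice, not taken from the article's scripts.
    $pip = New-AzureRmPublicIpAddress -Name "${VMName}-pip" `
        -ResourceGroupName $ResourceGroupName `
        -Location $vm.Location `
        -AllocationMethod Dynamic

    $nic.IpConfigurations[0].PublicIpAddress = $pip
    Set-AzureRmNetworkInterface -NetworkInterface $nic | Out-Null
    return $pip
}
```

NSG Rules would need a similar step; they are left out here to keep the sketch short.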

(Note that no VMs are created in the DR Site yet; only their images are replicated there. These images are spun up into VMs when the failover happens.)

Azure Documentation related to Recovery Plans is available here

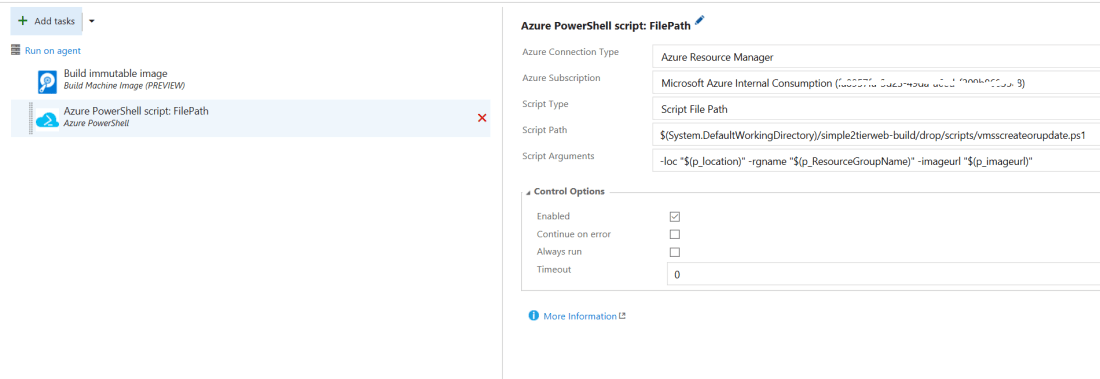

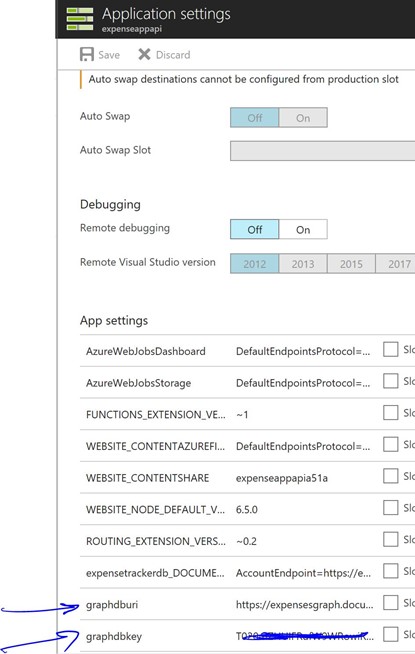

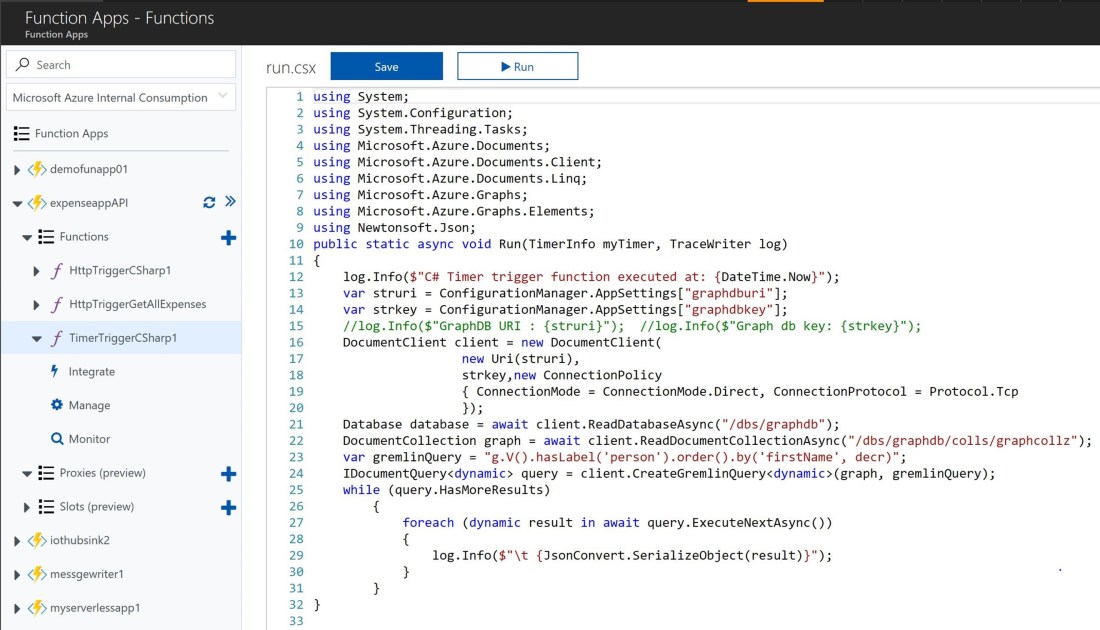

3. Create an Automation Services Account in the Target Data Center (Azure DR Site) and add to it the 2 Runbook scripts provided with this article. Download the scripts from the GitHub location here.

- Create 2 Runbooks named after the .ps1 files referred to in this article, SetSqlVmIp.ps1 and asraddpublicip.ps1, and copy and paste the corresponding PowerShell script into each.

- Upload the third PowerShell Script file, UpdateWebConfigRemote.ps1, to Azure Blob Storage, and obtain its public endpoint URL. This would be required later.

- In the Azure portal, for this Automation Account, create a variable that would store the Private IP Address of the Azure VM running the SQL Server database. In the scenario described here, the Variable name used is PrivateIpSqlDb
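As an alternative to the portal, the variable could also be created from PowerShell. The sketch below is only an illustration: the wrapper name `New-SqlIpVariable` and the placeholder value `0.0.0.0` are assumptions, and it presumes an authenticated AzureRM session with the AzureRM.Automation module loaded.

```powershell
# Hypothetical wrapper: create the unencrypted Automation variable that will
# later hold the Private IP Address of the SQL Server VM.
function New-SqlIpVariable {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [string]$AutomationAccountName = 'asrdraccount',
        [string]$VariableName = 'PrivateIpSqlDb'
    )
    # '0.0.0.0' is only a placeholder; the SetSqlVmIp Runbook overwrites it during failover.
    New-AzureRmAutomationVariable -ResourceGroupName $ResourceGroupName `
        -AutomationAccountName $AutomationAccountName `
        -Name $VariableName `
        -Value '0.0.0.0' `
        -Encrypted $false
}

# Example call (the resource group name is a placeholder):
# New-SqlIpVariable -ResourceGroupName 'my-dr-rg'
```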

After the above steps are performed, a few changes need to be made in the scripts.

- In the Runbook asraddpublicip, change the values of the following variables, based on the Automation Account and the Automation Account variable name created above.

$AutomationVariableName = 'PrivateIpSqlDb'

$AutomationAccountName = 'asrdraccount'

Also, replace the FileUri parameter for the UpdateWebConfigRemote.ps1 uploaded to Blob Storage, in the PowerShell command below.

    Set-AzureRmVMCustomScriptExtension -ResourceGroupName $VM.ResourceGroupName `
        -VMName $VM.RoleName `
        -Name "myCustomScript" `
        -FileUri "https://asrdrscriptstore.blob.core.windows.net/scripts/UpdateWebConfigRemote.ps1" `
        -Run "UpdateWebConfigRemote.ps1" -Argument $scriptarguments -Location $VMData.Location

- In the Runbook SetSqlVmIp, change the values of the following variables, based on the Automation Account and Automation Account Variable name used in it, similar to the asraddpublicip Runbook above

$AutomationVariableName = 'PrivateIpSqlDb'

$AutomationAccountName = 'asrdraccount'

- The PowerShell Script UpdateWebConfigRemote.ps1 contains certain hardcoded values. While these could be parameterized to make the script more generic, they are retained as they are here for simplicity.

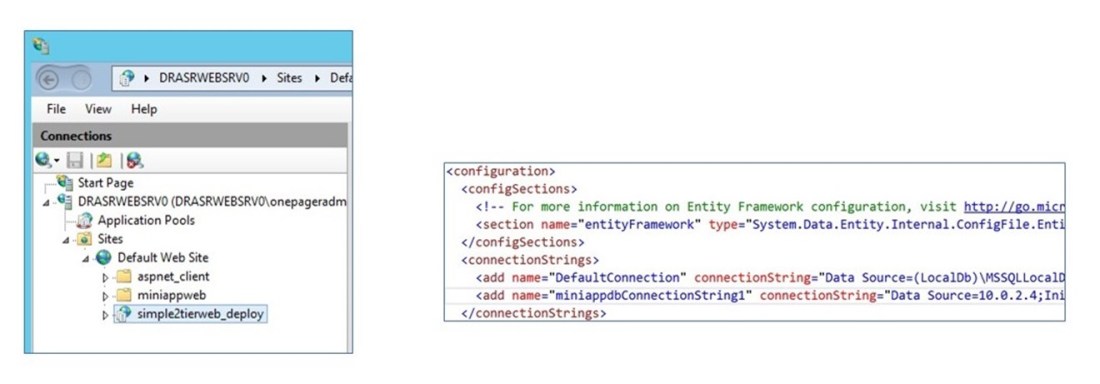

- The Web Application name used is simple2tierweb_deploy, and it is added as an Application within the ‘Default Web Site’ in IIS on the Web Server. See the screenshot.

- The script looks for a connection string named miniappdbConnectionString1, in which it replaces the IP Address of the SQL Server VM in the Primary Data Center with that of its counterpart in the DR Site during failover.
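The connection string replacement described above can be sketched roughly as follows. This is not the UpdateWebConfigRemote.ps1 script from the repository. The function name `Update-ConnectionStringIp` is hypothetical, and the sketch assumes a web.config without an XML namespace on the `configuration` element and a connection string that contains exactly one IPv4 address.

```powershell
# Hypothetical helper: replace the first IPv4 address found in a named
# connection string of a web.config file, then save the file in place.
function Update-ConnectionStringIp {
    param(
        [Parameter(Mandatory)][string]$ConfigPath,
        [Parameter(Mandatory)][string]$NewIp,
        [string]$ConnectionName = 'miniappdbConnectionString1'
    )
    # Resolve to a full path because .NET does not follow the PowerShell location.
    $fullPath = (Resolve-Path -LiteralPath $ConfigPath).ProviderPath

    $xml = New-Object System.Xml.XmlDocument
    $xml.PreserveWhitespace = $true
    $xml.Load($fullPath)

    $node = $xml.SelectSingleNode("/configuration/connectionStrings/add[@name='$ConnectionName']")
    if ($null -eq $node) {
        throw "Connection string '$ConnectionName' not found in $fullPath"
    }

    # Loose IPv4 pattern; only the first match is replaced.
    $ipPattern = [regex]'\b\d{1,3}(\.\d{1,3}){3}\b'
    $current   = $node.GetAttribute('connectionString')
    $updated   = $ipPattern.Replace($current, $NewIp, 1)

    $node.SetAttribute('connectionString', $updated)
    $xml.Save($fullPath)
    return $updated
}

# Example call (the path is a placeholder for the site's physical folder):
# Update-ConnectionStringIp -ConfigPath 'C:\path\to\web.config' -NewIp '10.1.0.5'
```

Matching on the connection string name rather than on the old IP Address means the function does not need to know the address used in the Primary Data Center.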

Note: To execute the above Runbooks, the Automation Service requires certain Modules to be available. Import them into the Automation Account if they are not already present. Refer to this link for more details on these Modules. Their names are:

- AzureRM.profile

- AzureRM.Resources

- AzureRM.Automation

- AzureRM.Network

- AzureRM.Compute
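One way to check which of these Modules still need to be imported is sketched below. The helper name `Get-MissingModule` is hypothetical; the commented usage assumes an authenticated AzureRM session and uses a placeholder resource group name.

```powershell
# Modules the Runbooks in this article depend on.
$requiredModules = 'AzureRM.profile', 'AzureRM.Resources', 'AzureRM.Automation',
                   'AzureRM.Network', 'AzureRM.Compute'

# Hypothetical helper: return the required module names that are not in the installed list.
function Get-MissingModule {
    param(
        [Parameter(Mandatory)][string[]]$Required,
        [string[]]$Installed = @()
    )
    # -notcontains compares strings case-insensitively.
    $Required | Where-Object { $Installed -notcontains $_ }
}

# Example usage against an Automation Account ('my-dr-rg' is a placeholder):
# $installed = (Get-AzureRmAutomationModule -ResourceGroupName 'my-dr-rg' `
#                  -AutomationAccountName 'asrdraccount').Name
# Get-MissingModule -Required $requiredModules -Installed $installed
```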

4. In the Azure Portal, select the Recovery Services Vault that contains the VMs protected in the earlier steps, and add a Recovery Plan. Refer to this link for details on how to create Recovery Plans and this link for details on how to add Runbooks to Recovery Plans.

In the context of the scenario covered in this article, the Recovery Plan would look as follows after configuration:

- Two separate failover Groups are created: Group 1 comprising the SQL Server VM, and Group 2 comprising the Web Server VM, in that sequence.

- Add a post step to Group 1 and add the Runbook SetSqlVmIp to it. As an outcome of this action, the Internal IP of the SQL Server VM that fails over is retrieved and stored in the Automation Variable PrivateIpSqlDb.

- Add a post step to Group 2 and add the Runbook asraddpublicip to it. As an outcome of this step, two key actions are performed:

  - A PublicIP resource is created and linked to the Network interface card of the Web Server VM.

  - The custom script extension executes the PowerShell script UpdateWebConfigRemote.ps1, which is stored in Azure Storage, remotely on the Web Server VM. The value of PrivateIpSqlDb is retrieved from the Automation variable and used to replace the IP Address in the web.config file of the ASP.NET Web Application.
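For readers who want a feel for what the Group 1 post step does, here is a minimal sketch under stated assumptions. It is not the SetSqlVmIp Runbook from the repository. The function names `Get-RecoveryPlanVm` and `Save-SqlVmPrivateIp` are hypothetical. The sketch assumes that ASR passes the Runbook a `$RecoveryPlanContext` object whose `VmMap` property holds one entry per failed-over VM with `ResourceGroupName` and `RoleName` properties, that the NIC lives in the same Resource Group as the VM, and that the session is already authenticated.

```powershell
# Hypothetical helper: list the VM entries in $RecoveryPlanContext.VmMap.
# VmMap is an object whose property names are VM IDs; each value describes one VM.
function Get-RecoveryPlanVm {
    param([Parameter(Mandatory)]$RecoveryPlanContext)
    $RecoveryPlanContext.VmMap.PSObject.Properties |
        Where-Object { $_.MemberType -eq 'NoteProperty' } |
        ForEach-Object { $_.Value }
}

# Hypothetical helper: read the Private IP of each VM in the group and store it
# in the Automation variable. With one SQL Server VM in Group 1, this stores its IP.
function Save-SqlVmPrivateIp {
    param(
        [Parameter(Mandatory)]$RecoveryPlanContext,
        [Parameter(Mandatory)][string]$AutomationResourceGroupName,
        [string]$AutomationAccountName = 'asrdraccount',
        [string]$AutomationVariableName = 'PrivateIpSqlDb'
    )
    foreach ($vmInfo in (Get-RecoveryPlanVm -RecoveryPlanContext $RecoveryPlanContext)) {
        $vm      = Get-AzureRmVM -ResourceGroupName $vmInfo.ResourceGroupName -Name $vmInfo.RoleName
        $nicId   = $vm.NetworkProfile.NetworkInterfaces[0].Id
        $nicName = ($nicId -split '/')[-1]

        # Assumption: the NIC is in the same Resource Group as the VM.
        $nic       = Get-AzureRmNetworkInterface -ResourceGroupName $vmInfo.ResourceGroupName -Name $nicName
        $privateIp = $nic.IpConfigurations[0].PrivateIpAddress

        Set-AzureRmAutomationVariable -ResourceGroupName $AutomationResourceGroupName `
            -AutomationAccountName $AutomationAccountName `
            -Name $AutomationVariableName `
            -Value $privateIp `
            -Encrypted $false
    }
}
```

Because Group 1 completes before Group 2 starts, the variable is populated by the time the Web Server post step reads it.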

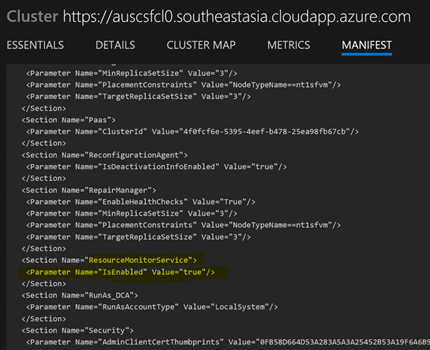

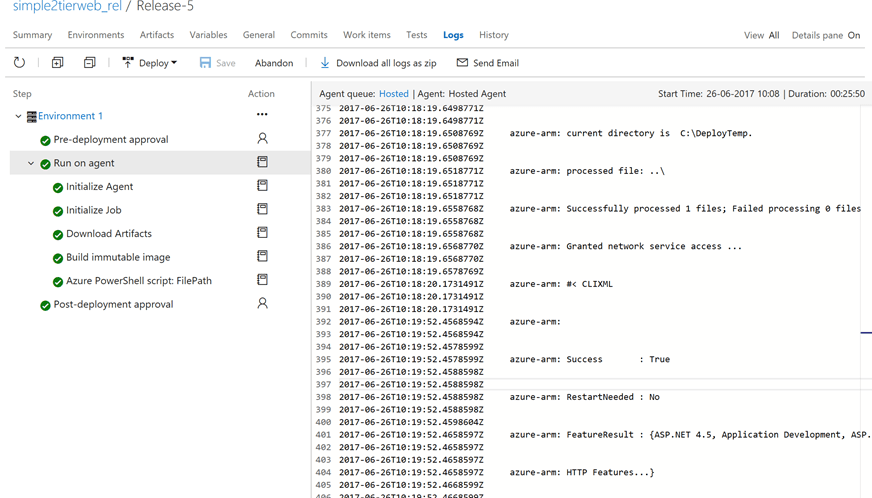

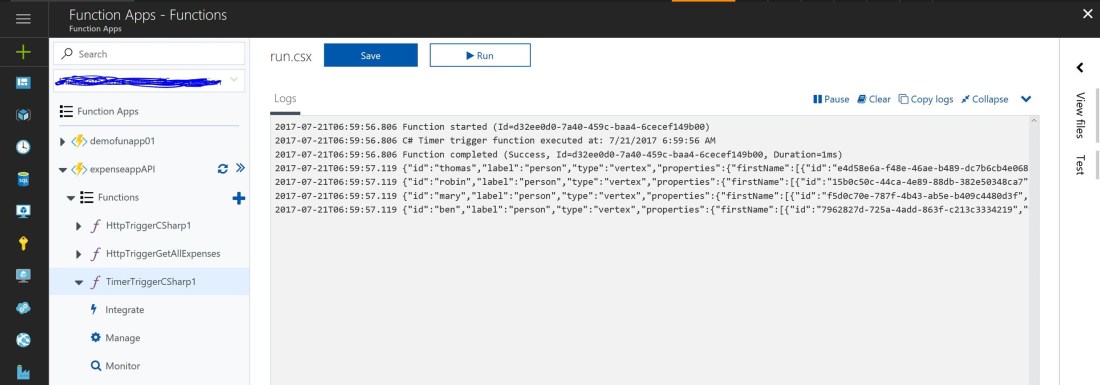

5. From the Azure Portal, choose the Recovery Services Vault, select the Recovery Plan, and trigger a Test Failover. Once the failover completes, notice that in the Resource Group into which the failover happens, the Web Server and Database Server VMs are created, the Web Server is added to an Availability Set, and a PublicIP Resource is added and assigned to the Web Server VM. See the screenshot below.

Launch the URL of the Web Application using the Public IP Address from the failed-over site, as shown above, and you should see the ASP.NET Application working in the DR Site. Shown below is the Web Application, which displays the data obtained from the SQL Server database running in the other VM.

References – I have used the guidance available in the following links and repurposed the scripts in them to implement the scenario in this article

Runbook Automation – Recovery Plan scripts in TechNet – here

GitHub Quick Start Template – here